What is Tokenization and How is it used in AI?

When working with LLMs and other NLP models we often hear about tokens and tokenizers. In this post we define and discuss the major topics needed to understand tokenization and how it is used in the context of AI.

What is a Token?

Tokens are the individual units of data used by different NLP models (including LLMs) for input, output, and training. Tokens will often represent entire words, but it is possible for a token to be any sequence of bytes and/or characters. This means that a token could be a single character, multiple characters, multiple words, multiple bytes, multiple sentences, etc. The logic of how larger text is broken down into tokens is determined by the tokenizer.

What is a Tokenizer?

The primary job of a tokenizer is to merge and/or split data (such as text) into "tokens" and map these tokens to a "vocabulary" which can then be fed to a model such as Llama or ChatGPT.

What is Vocabulary?

A tokenizer's vocabulary is the set of tokens the model understands. Each token in a vocabulary is associated with an ID. The token IDs from an input text are used for model training and inference. Vocabularies are often stored as a part of a tokenizer in a separate file. A good vocabulary example is the the GPT-2 vocab.json file.

How is a Vocabulary Created?

Building a vocabulary starts with a corpus, which can be thought of as a collection of texts. These can include dictionaries, books, websites, social media posts, technical documents, legal documents, spoken language (converted to text), etc.

With this corpus a tokenizer can apply the logic (algorithm) needed to create a vocabulary. A simple word based tokenizer might split our corpus on spaces and make each split a token in our vocabulary. In practice most tokenizers use different techniques optimize vocabulary for efficiency and effectiveness. We discuss some of these approaches below.

What is OOV?

OOV stands for "Out of Vocabulary". This means that a token encountered during training or inference is not found in the tokenizer vocabulary. Generally there is a placeholder in the vocabulary that exists for unknown tokens, often seen as [UNK] or <unk>. Any unknown tokens found during training or inference will be mapped to this unknown placeholder ID.

Word Based Tokenizers

Perhaps the simplest type of tokenizer to conceptualize is a word based tokenizer where text is split on spaces to create word tokens.

"Hello World!" -> ["Hello", "World!"]

Word based tokenizers will often have rules around punctuation. For example splitting strings on spaces and punctation could look like this:

"Hello World!" -> ["Hello", "World", "!"]

Pros and Cons of Word Based Tokenizers

Word based tokenizers are intutive, easy to implement, and result in easy to read tokens. Word based tokenizers often require fewer input and output tokens than other approaches. This can mean lower costs (e.g. ChatGPT) and faster training and inference if the vocabulary stays small. Word based tokenizers can be useful for cases where the vocabulary will be small and fixed.

Word based tokenizers for LLMs often result in very large vocabularies. Large vocabularies can:

- Reduce a model's ability to generalize especially when dealing with rare words

- Make it more difficult to learn relationships for words with many forms (e.g. "run", "running", "runner")

- Increase GPU memory requirements

- Slow down model training and inference (increased softmax/probability computations)

- Increase risk of OOV during inference and training (slang, mispellings, etc.)

While still useful for many NLP applications, word based tokenizers are generally not used with modern LLMs.

Character Based Tokenizers

Character based tokenization means that every character is converted into a token. For example "Hello World!" will be coverted into 12 tokens.

"Hello World!" -> ["H", "e", "l", "l", "o", " ", "W", "o", "r", "l", "d", "!"]

Pros and Cons of Character Based Tokenizers

Character based tokenization will result in much smaller vocabularies since each word can be built from character tokens. This also means it can handle unexpected words like slang and misspellings without having OOV concerns. For fine grained use cases like OCR character based tokenizers can be a good fit.

Character based tokenization will also require longer sequences (more tokens) when interacting with a model. This can slow down training and will reduce the number of words (represented as tokens) that will fit in an LLM context window. It is also harder for models to learn meaningful relationships between words when each token represents a single character.

Subword Tokenizers

Subword tokenization is a popular hybrid approach between word and character tokenization and is used by large models such as BERT and T5. The main idea behind subword tokenization is that common words remain whole, but rare and complex words will often be broken down into meaningful parts. An example of subword tokenization can be seen with "OpenAI", where one word can be represented by two tokens.

"OpenAI" -> ["Open", "AI"]

Pros and Cons of Subword Tokenizers

Subword tokenization helps keep vocabulary sizes smaller than word based tokenization as well as reduces the lengths of model input and output token sequences versus character based tokenization. This approach can also reduce the number of OOV words we encounter since unknown words can often be broken down into tokens found in the vocabulary.

Subword tokenization does require more processing to create a vocabulary using an algorithm like BPE or WordPiece. It is also more computaionally expensive during inference. Subword tokenizers can also split words on unituitive boundaries, making it harder for models to learn relationships between some words. For example the tokenization of "untie" in with the T5 model tokenizer.

"untie" -> ["un", "t", "i", "e"]

Byte Based Tokenizers

Byte based tokenizers will operate on raw byte sequences instead of words or characters. A byte based tokenizer vocabulary starts with all individual bytes (0-255), and will add other commonly occuring byte sequences to the vocabulary using an algorithm like BPE or WordPiece. Many LLM models including GPT-4, Llama, Mistral, and Claude seem to be using byte based tokenization.

Pros and Cons of Byte Based Tokenizers

Since byte based tokenization operates on raw bytes, it supports all languages, scripts, and special characters, and will never encounter an OOV token. Byte based tokenization also reduces the lengths of model input and output token sequences versus character based tokenization (similar to subword tokenization).

Byte based tokenization does require more processing to create a vocabulary using an algorithm like BPE or WordPiece. It is also more computaionally expensive during inference. Just like subword tokenization, it can also split words on unintuitive boundaries making it harder for models to learn relationships between some words. For example the tokenization of "Autobahn" in GPT-4.

"Autobahn" -> ["Aut", "ob", "ahn"]

Can I use any Tokenizer with an LLM?

Unfortunately no. If an LLM or similar NLP model was trained using a specific tokenizer, that same tokenizer must be used during inference. Otherwise we will break the model’s ability to understand input. Different tokenizers will have different vocabulary sizes and rules, making them incompatible. Switching tokenizers would require retraining the model.

Non-Text Tokenization?

Generally tokenization implies the use of text data, but the concept of tokens and tokenizers also exists for non-text data. For different modalities the idea of tokenization will be applied differently. For example some computer vision models will use "vision transformers" to split images into "patches" for training and inference.

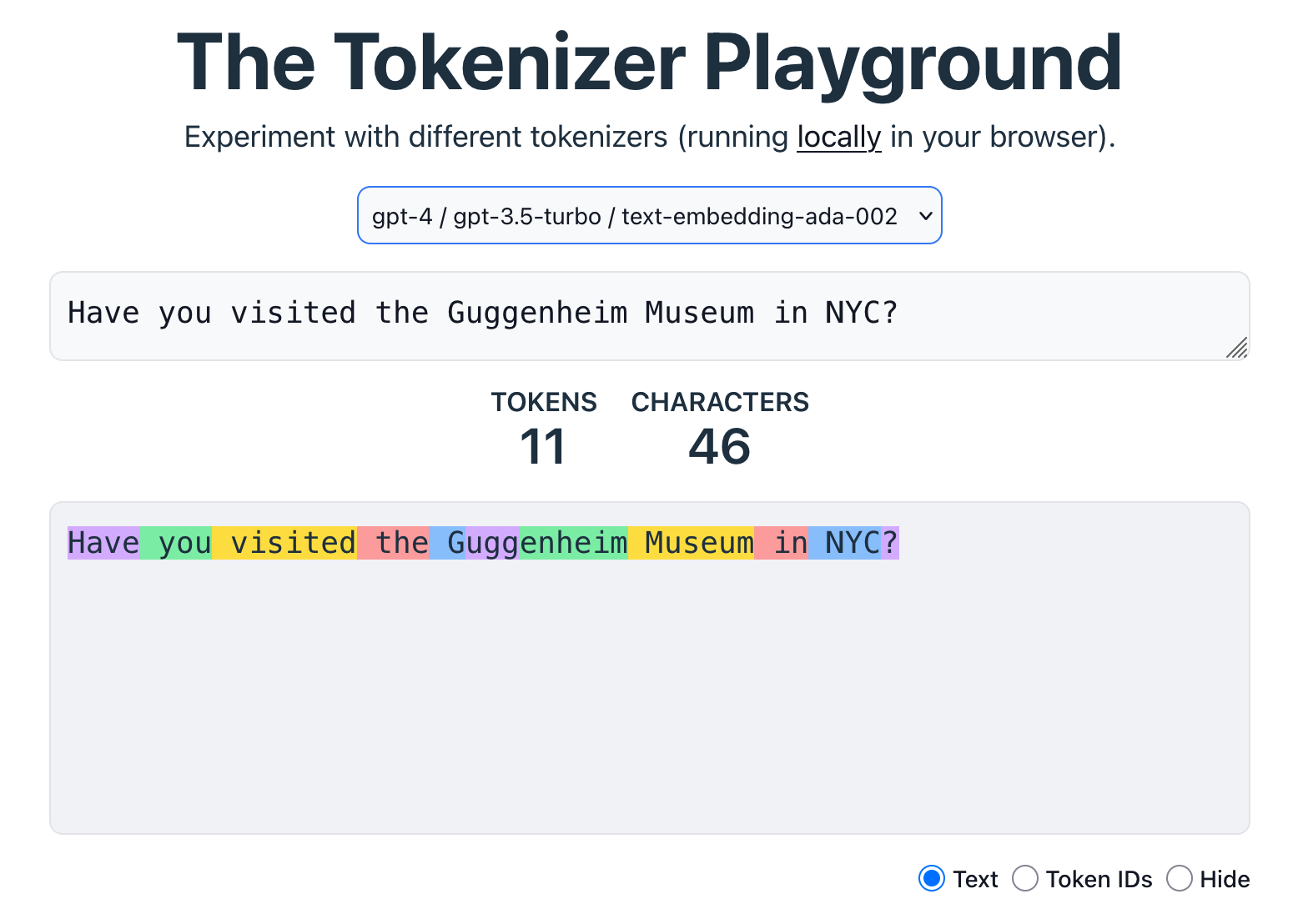

Test it Out!

Interested in seeing how your own input will be tokenized? Both the Tokenizer Playground and OpenAI's Tokenizer page allow us to see tokenization in action. These pages will show us both token boundaries as well as the vocabulary IDs that will be used an input to a model.